Reduce Spark Example

Spark's RDD reduce() is an aggregate action used to calculate the min, max, and total of the elements in a dataset. Its PySpark signature is reduce(f: Callable[[T, T], T]) → T, and it reduces the elements of the RDD using the specified commutative and associative binary operator. Here's an example of how to use reduce() in PySpark: create an RDD with rdd = sc.parallelize([1, 2, 3, 4, 5]), then define a function that combines two elements. For example, we can call distData.reduce(lambda a, b: a + b) to add up the elements of the list. We describe operations on distributed datasets later. To summarize, reduce, excluding driver-side processing, uses exactly the … (see "Understanding treeReduce() in Spark"). This chapter will include practical examples of solutions demonstrating the use of the most common of Spark's reduction … I'll show two examples where I use Python's reduce from the functools library to repeatedly apply operations to …
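The requirement that the operator be commutative and associative can be illustrated without a cluster. The following is a minimal sketch in plain Python using functools.reduce; it is not Spark's implementation. The helper name partitioned_reduce and the contiguous chunking scheme are assumptions made for illustration: each chunk stands in for a partition that is reduced locally before the partial results are merged.

```python
from functools import reduce


def partitioned_reduce(data, num_partitions, op):
    """Hypothetical helper (not a Spark API): reduce each chunk, then merge the partial results."""
    # Split the data into contiguous chunks that stand in for partitions.
    size = -(-len(data) // num_partitions)  # ceiling division
    partitions = [data[i:i + size] for i in range(0, len(data), size)]
    # Reduce inside each non-empty partition first ...
    partials = [reduce(op, part) for part in partitions if part]
    # ... then combine the per-partition results.
    return reduce(op, partials)


numbers = [1, 2, 3, 4, 5]

# Sum, min, and max are commutative and associative, so partitioning does not matter.
total = partitioned_reduce(numbers, 2, lambda a, b: a + b)
smallest = partitioned_reduce(numbers, 2, lambda a, b: a if a < b else b)
largest = partitioned_reduce(numbers, 2, lambda a, b: a if a > b else b)
print(total, smallest, largest)  # 15 1 5

# Subtraction is neither commutative nor associative, so the answer
# changes once the data is split into partitions.
sequential = reduce(lambda a, b: a - b, numbers)
split = partitioned_reduce(numbers, 2, lambda a, b: a - b)
print(sequential, split)  # -13 -3
```

With two chunks, [1, 2, 3] and [4, 5], addition gives 6 + 9 = 15 regardless of grouping, while subtraction gives (-4) - (-1) = -3 instead of the sequential -13. This is why an operator passed to reduce() should not depend on how the elements are grouped or ordered.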

From data-flair.training

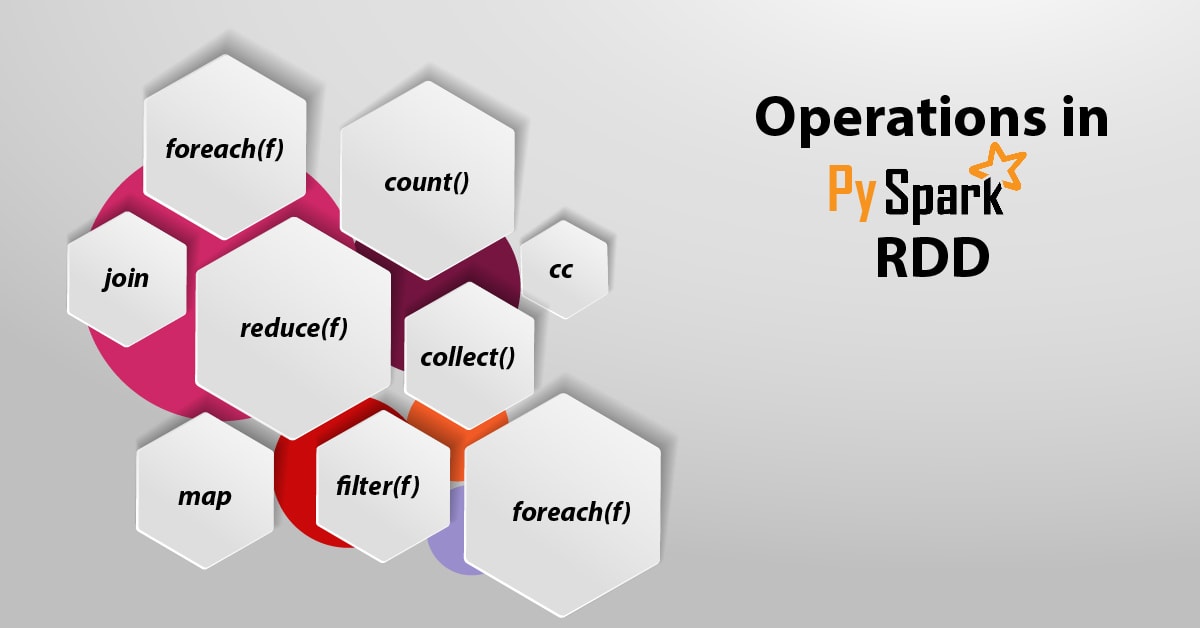

PySpark RDD With Operations and Commands DataFlair

From oraclejavacertified.blogspot.com

Java Spark RDD reduce() Examples sum, min and max operations Oracle

From www.cloudduggu.com

Apache Spark Transformations & Actions Tutorial CloudDuggu

From margus.roo.ee

ApacheSpark mapreduce quick overview Margus Roo

From www.researchgate.net

Mapreduce, Sparkbased description of E1CFastBC. The main execution

From www.educba.com

MapReduce vs spark Top Differences of MapReduce vs spark

From www.youtube.com

Spark Aggregations reduceByKey and aggregateByKey YouTube

From www.youtube.com

Spark Data Frame Internals Map Reduce Vs Spark RDD vs Spark Dataframe

From www.examturf.com

Spark vs MapReduce Performance Differences Big Data Analysis

From www.simplilearn.com

Implementation of Spark Applications Tutorial Simplilearn

From www.pinterest.com

Apache Spark vs Hadoop MapReduce Feature Wise Comparison [Infographic

From sparkbyexamples.com

Reduce KeyValue Pair into Keylist Pair Spark By {Examples}

From medium.com

Serialization challenges with Spark and Scala ONZO Technology Medium

From testguy.net

Spark suppression circuits

From blog.csdn.net

hadoop & spark mapreduce comparison & framework design and understanding_Briefly describe your understanding of the hafs and mapreduce frameworks CSDN Blog

From sparkbyexamples.com

Spark groupByKey() vs reduceByKey() Spark By {Examples}

From blog.roblox.com

How Roblox Reduces Spark Join Query Costs With Machine Learning

From www.differencebetween.net

Difference Between MapReduce and Spark Difference Between

From www.cloudduggu.com

Apache Spark RDD Introduction Tutorial CloudDuggu